Generative AI fundamentals: Exploring the 6-Layer architecture

Generative AI can be understood as a six-layer architecture, with each layer responsible for a distinct part of the technology's functionality. Separating the stack this way lets each component be developed, optimized, and deployed for its specific role. In the following sections, we explore each layer in detail to show how generative AI models are constructed and how they perform.

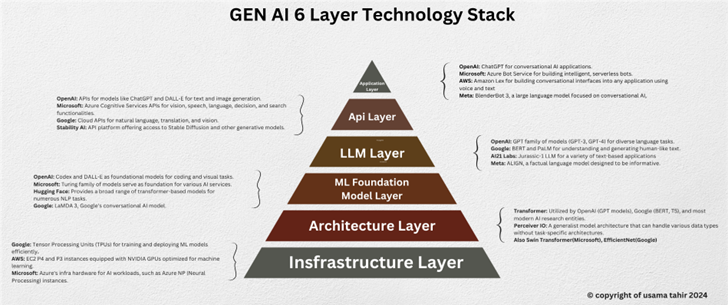

Image above: the six layers and the tool stacks offered by various tech giants.

Layer 1: Infrastructure Layer

Overview

The Infrastructure Layer is the foundation for all generative AI models, encompassing the essential hardware and software resources required for their development, training, and deployment. Without a robust infrastructure, generative AI models would struggle with the computational demands, leading to slower development cycles and reduced performance.

Key Components

1. High-Performance Computing (HPC) Resources

● Graphics Processing Units (GPUs) and Tensor Processing Units (TPUs): These specialized processors are critical for the parallel processing required to train large-scale neural networks quickly and efficiently.

● Central Processing Units (CPUs): While GPUs and TPUs handle specialized tasks, CPUs manage general-purpose processing, supporting the overall functionality of AI systems.

● Distributed Computing Systems: These systems allow the distribution of data and computational tasks across multiple nodes, facilitating the handling of large datasets and complex model training processes.

2. Data Storage Solutions

● Data Lakes: Centralized repositories that can store vast amounts of structured and unstructured data, providing flexibility in data management and retrieval.

● Databases: Both SQL and NoSQL databases are utilized to efficiently manage and retrieve large datasets, each serving different types of data and query requirements.

● Data Warehouses: These systems aggregate large volumes of historical data, which can be used for in-depth analysis and training of AI models.

3. Networking Components

● High-Speed Networks: Essential for rapid data transfer between storage systems and computational resources, minimizing latency and ensuring seamless data flow.

● Cloud Infrastructure: Provides scalable, on-demand resources, enabling flexible AI workloads and cost-effective resource management.
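To make the idea of distributing data and computation across nodes more concrete, here is a minimal sketch of data-parallel training. It is an illustration only: threads stand in for separate machines, and the function names (`shard`, `partial_gradient`, `distributed_gradient`) and the toy dataset are assumptions, not part of any real framework.

```python
from concurrent.futures import ThreadPoolExecutor


def shard(data, num_workers):
    """Split data into num_workers contiguous shards of near-equal size."""
    size, extra = divmod(len(data), num_workers)
    shards, start = [], 0
    for i in range(num_workers):
        end = start + size + (1 if i < extra else 0)
        shards.append(data[start:end])
        start = end
    return shards


def partial_gradient(points, w):
    """Sum of d/dw (w*x - y)^2 over the points held by one worker."""
    return sum(2 * (w * x - y) * x for x, y in points)


def distributed_gradient(data, w, num_workers=4):
    """Each worker computes a partial sum; the results are combined and averaged."""
    shards = shard(data, num_workers)
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partials = list(pool.map(lambda s: partial_gradient(s, w), shards))
    return sum(partials) / len(data)


# Toy dataset (hypothetical) generated from y = 3x.
data = [(x, 3 * x) for x in range(1, 11)]

w = 0.0
for _ in range(200):
    w -= 0.01 * distributed_gradient(data, w)  # plain gradient descent step
```

In a real cluster the shards would live on different nodes and the partial results would travel over the high-speed network described above, which is why bandwidth and latency matter so much at this layer.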

Layer 2: Architecture Layer

Overview

The Architecture Layer is crucial for the structural design of generative AI models. It involves selecting and configuring neural network architectures suited to specific generative tasks. This layer ensures that each component is optimized for its role, determining the capability and efficiency of the models in learning from data and generating new content.

Key Components

1. Transformers: Use attention mechanisms to weigh how strongly each element of a sequence relates to the others, which lets the model capture dependencies between input and output data. This makes transformers highly suitable for tasks such as natural language processing (NLP), text generation, and translation.

2. Generative Adversarial Networks (GANs): Consist of two competing networks: a generator, which creates data, and a discriminator, which evaluates its authenticity. This competitive dynamic leads to the generation of high-quality synthetic data, useful in image generation and data augmentation.
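The attention mechanism behind transformers can be sketched in a few lines. The example below is a simplified, pure-Python version of scaled dot-product attention, without the learned projection matrices or multiple heads that real models use. The function names and toy vectors are assumptions chosen for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # Output is the weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Toy example: the query points in the same direction as the first key.
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
queries = [[5.0, 0.0]]

outputs, weights = attention(queries, keys, values)
```

Because the query aligns with the first key, most of the weight lands on the first value vector, which is the sense in which attention "manages dependencies" between positions.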

Layer 3: ML Foundation Model Layer

Overview

This layer involves developing foundational machine learning models trained on vast datasets to understand underlying patterns. These models form the basis for more specialized applications, ensuring robust learning and generalization from data.

Key Components

1. Training Data

● Quality and Quantity: Access to high-quality, diverse datasets is crucial for training robust models that perform well across various tasks.

● Preprocessing: Techniques such as data cleaning, normalization, and augmentation are used to enhance model performance by preparing the data effectively.

2. Training Algorithms

● Supervised Learning: Models learn from labeled data.

● Unsupervised Learning: Models identify patterns and structures in unlabeled data, discovering hidden relationships.

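● Semi-supervised and Self-supervised Learning: Semi-supervised learning combines a small amount of labeled data with a large amount of unlabeled data. Self-supervised learning derives its training signal from the data itself, for example by predicting a hidden or upcoming part of the input, so no manual labels are needed.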

3. Model Evaluation

● Metrics: Key performance indicators such as accuracy, precision, recall, and F1 score are used to evaluate model performance.

● Validation Techniques: Methods like cross-validation and hold-out validation ensure models generalize well to new data.
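As a rough illustration of the evaluation ideas above, the following sketch computes accuracy, precision, recall, and F1 for binary labels and builds the index splits for k-fold cross-validation. The helper names and the sample labels are made up for this example. Generative models are often judged with additional task-specific measures that are not shown here.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if precision + recall
        else 0.0
    )
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


def k_fold_indices(n, k):
    """Yield (train_indices, validation_indices) for each of k folds."""
    folds = [list(range(i, n, k)) for i in range(k)]
    for i in range(k):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, folds[i]


# Hypothetical labels and predictions for eight examples.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)

splits = list(k_fold_indices(10, 5))
```

Each example appears in exactly one validation fold, so every data point is used for both training and validation across the k rounds.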

Layer 4: LLM (Large Language Model) Layer

Overview

This layer deals with the implementation of large language models like GPT. These models, trained on extensive text data, generate human-like text, understand context, and perform a wide range of language-related tasks, driving advancements in NLP.

Key Components

1. Model Architecture

● Transformer-based Models: These models utilize self-attention mechanisms to process text data efficiently.

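● Variants: Different models are optimized for different NLP tasks, each with unique strengths. GPT is a decoder-only model geared toward text generation, BERT is an encoder-only model geared toward language understanding, and T5 is an encoder-decoder model that frames every task as text-to-text.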

2. Training Data

● Text Corpora: Large-scale datasets such as Common Crawl and Wikipedia provide the diverse and extensive text needed for training.

● Diversity and Representation: Training data should cover a variety of topics, styles, and dialects to improve model generalization and fairness.

3. Fine-Tuning

● Task-Specific Adaptation: Adjusting pre-trained LLMs for specific tasks like translation, summarization, or question-answering.

● Transfer Learning: Leveraging pre-trained models to enhance performance on new, smaller datasets, making efficient use of previously learned knowledge.
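One simple form of transfer learning is to keep a pre-trained model frozen and train only a small task-specific head on top of it. The sketch below imitates that pattern at toy scale. The `PRETRAINED_EMBEDDINGS` table is a hypothetical stand-in for a real pre-trained model, and the tiny sentiment dataset is invented for illustration. Full fine-tuning, which also updates the base model's weights, is not shown.

```python
import math

# Hypothetical stand-in for a frozen pre-trained model: fixed word vectors.
PRETRAINED_EMBEDDINGS = {
    "great": [1.0, 0.0],
    "love": [0.9, 0.1],
    "bad": [0.0, 1.0],
    "awful": [0.1, 0.9],
}


def encode(text):
    """Frozen encoder: average the vectors of known words (never updated)."""
    vecs = [
        PRETRAINED_EMBEDDINGS[w]
        for w in text.lower().split()
        if w in PRETRAINED_EMBEDDINGS
    ]
    if not vecs:
        return [0.0, 0.0]
    return [sum(col) / len(vecs) for col in zip(*vecs)]


def train_head(examples, epochs=20, lr=0.5):
    """Train only a logistic-regression head on top of the frozen features."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for text, label in examples:
            x = encode(text)
            z = sum(w * xi for w, xi in zip(weights, x)) + bias
            pred = 1.0 / (1.0 + math.exp(-z))
            err = pred - label  # gradient of the log loss w.r.t. z
            weights = [w - lr * err * xi for w, xi in zip(weights, x)]
            bias -= lr * err
    return weights, bias


def predict(text, weights, bias):
    """Return 1 for positive sentiment, 0 for negative."""
    x = encode(text)
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if z > 0 else 0


# Small task-specific dataset (hypothetical): 1 = positive, 0 = negative.
examples = [
    ("great movie", 1),
    ("awful plot", 0),
    ("love it", 1),
    ("bad acting", 0),
]
weights, bias = train_head(examples)
```

Only three numbers are learned here (two weights and a bias), which mirrors why adapting a pre-trained model usually needs far less data than training from scratch.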

Layer 5: API Layer

Overview

The API Layer provides standardized interfaces for accessing and utilizing generative AI models, enabling developers to integrate AI capabilities into their software products and services easily.

Key Components

1. RESTful APIs

● HTTP Methods: Using methods like GET, POST, PUT, and DELETE to interact with AI services.

● Endpoints: Defined URLs where AI services can be accessed, facilitating communication between client applications and AI models.

2. GraphQL APIs

● Flexible Queries: Allow clients to request precisely the data they need, enhancing efficiency and reducing data transfer overhead.

● Single Endpoint: All API interactions occur through a single endpoint, simplifying the integration process.

3. SDKs

SDKs are available for various programming languages (e.g., Python, JavaScript), making it easier for developers to integrate AI functionalities.
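To show how the REST and GraphQL styles differ in practice, here is a small sketch that builds (but does not send) a request for each, using only Python's standard library. The URLs, payload fields, and GraphQL schema are hypothetical placeholders. Real providers define their own endpoints and parameters, so check the relevant API documentation.

```python
import json
import urllib.request

# Hypothetical endpoints, used for illustration only.
REST_URL = "https://api.example.com/v1/generate"
GRAPHQL_URL = "https://api.example.com/graphql"


def build_rest_request(prompt, api_key, max_tokens=64):
    """REST style: POST a JSON body to a task-specific endpoint."""
    payload = {"prompt": prompt, "max_tokens": max_tokens}
    return urllib.request.Request(
        REST_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def build_graphql_request(prompt, api_key):
    """GraphQL style: one endpoint; the query names exactly the fields wanted."""
    query = (
        "query Generate($prompt: String!) "
        "{ generate(prompt: $prompt) { text } }"
    )
    body = {"query": query, "variables": {"prompt": prompt}}
    return urllib.request.Request(
        GRAPHQL_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


rest_req = build_rest_request("Hello", api_key="YOUR_API_KEY")
gql_req = build_graphql_request("Hello", api_key="YOUR_API_KEY")
```

Against a real service, the request would be sent with `urllib.request.urlopen(rest_req)`. An SDK typically wraps this request building and response parsing behind a single function call.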

Layer 6: Application Layer

Overview

The Application Layer involves deploying generative AI models in real-world scenarios across various industries, solving specific problems, and enhancing productivity. This layer showcases the tangible benefits of generative AI, demonstrating its value in improving efficiency and driving innovation.

Key Components

1. Content Creation Applications

● Text Generation: Automated writing assistants, content generators.

● Image and Video Creation: Tools for generating artwork, animations, and synthetic videos.

2. Healthcare Applications

● Medical Imaging: Enhanced analysis and diagnosis tools.

● Drug Discovery: Generating novel compounds and predicting their efficacy.

3. Customer Service Applications

● Chatbots: AI-powered virtual assistants providing real-time support.

● Voice Assistants: Natural language interfaces for customer interaction.

What’s Next:

After identifying these components, we will delve deeper into each process in this series of blogs on Gen AI. The posts will cover everything from data collection and preprocessing to model architecture design, training, fine-tuning, reinforcement learning from human feedback (RLHF), the retrieval-augmented generation (RAG) approach, and model evaluation. We'll also give a high-level overview of the layers and their key components. Stay tuned as we explore each step in detail to understand LLM development comprehensively.

Read the previous blog about Embracing Generative AI: A Comprehensive Guide for Professionals.

Author:

Usama Tahir, Full Stack Developer